A 12-Step Roadmap to Achieving ISO/IEC 42001 Certification

July 29, 2025

What is ISO/IEC 42001?

ISO/IEC 42001 is the first international standard specifically focused on Artificial Intelligence Management Systems (AIMS).

Published by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), this standard provides a comprehensive framework for businesses to manage AI systems responsibly, ethically, and in alignment with regulatory expectations.

Whether you’re building AI technologies or using third-party AI services, ISO/IEC 42001 offers a structured approach to ensuring transparency, fairness, accountability, and continual improvement throughout the lifecycle of your AI systems.

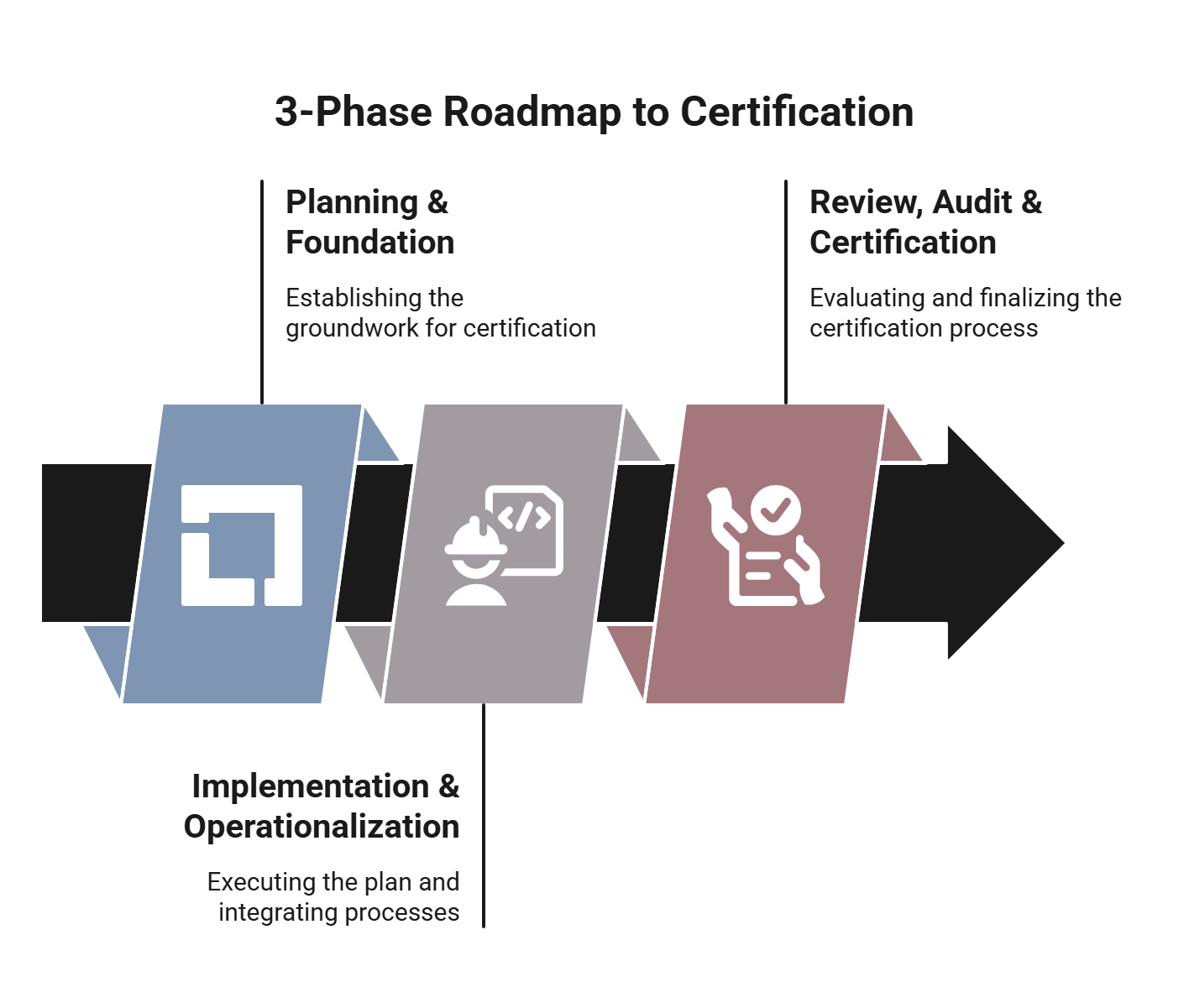

To help organizations navigate the journey toward ISO/IEC 42001 certification in a clear and structured way, the 12-step roadmap has been grouped into three distinct phases.

- Phase 1: Planning & Foundation focuses on securing executive buy-in, defining the scope, establishing governance structures, and setting up a risk management approach tailored to AI systems.

- Phase 2: Implementation & Operationalization moves from strategy to action, embedding policies into daily operations through data and model governance, transparency measures, documentation controls, and staff training.

- Phase 3: Review, Audit & Certification prepares the organization for formal evaluation, including internal audits, corrective actions, management reviews, and the external certification audit.

This phased approach makes the certification process more manageable by aligning activities with natural implementation milestones.

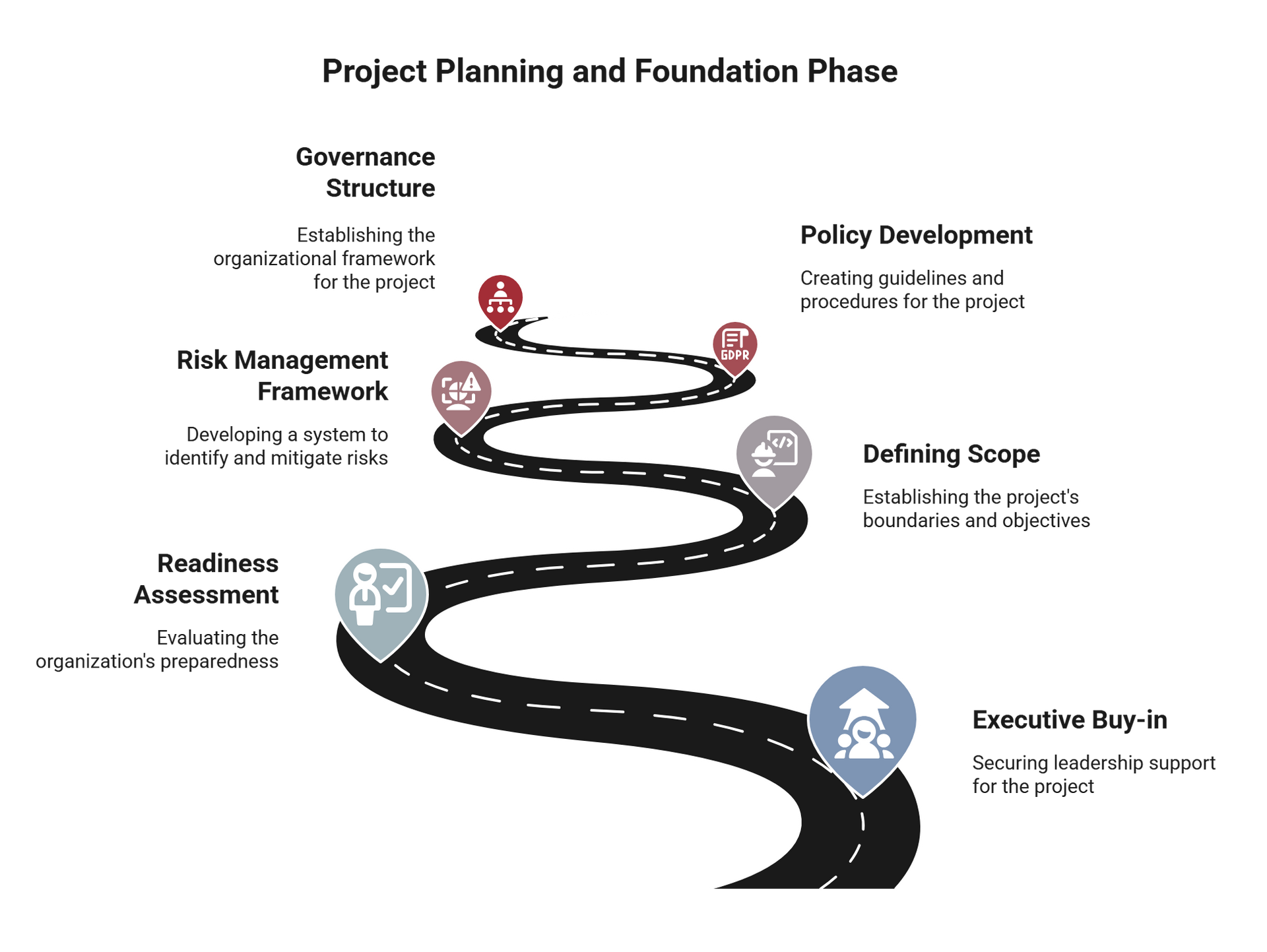

Phase 1: Planning and Foundation

This phase sets the stage for a successful implementation of an Artificial Intelligence Management System (AIMS) by aligning leadership, defining the project’s scope, and establishing the foundational structures required by ISO/IEC 42001. During this phase, organizations secure executive commitment, appoint a lead implementer, and build a cross-functional team to guide the initiative. A readiness assessment is conducted to identify gaps between current practices and the standard’s requirements, while the scope of the AIMS is clearly defined to include internal and third-party AI systems. This phase also includes developing a tailored risk management framework and drafting initial governance policies to ensure accountability, ethical AI use, and strategic alignment from the start.

To lead this critical phase effectively, organizations should assign a qualified AIMS Lead Implementer. Consider enrolling in a Certified ISO/IEC 42001 Lead Implementer course to gain the expertise needed to guide the project with confidence and ensure alignment with the standard.

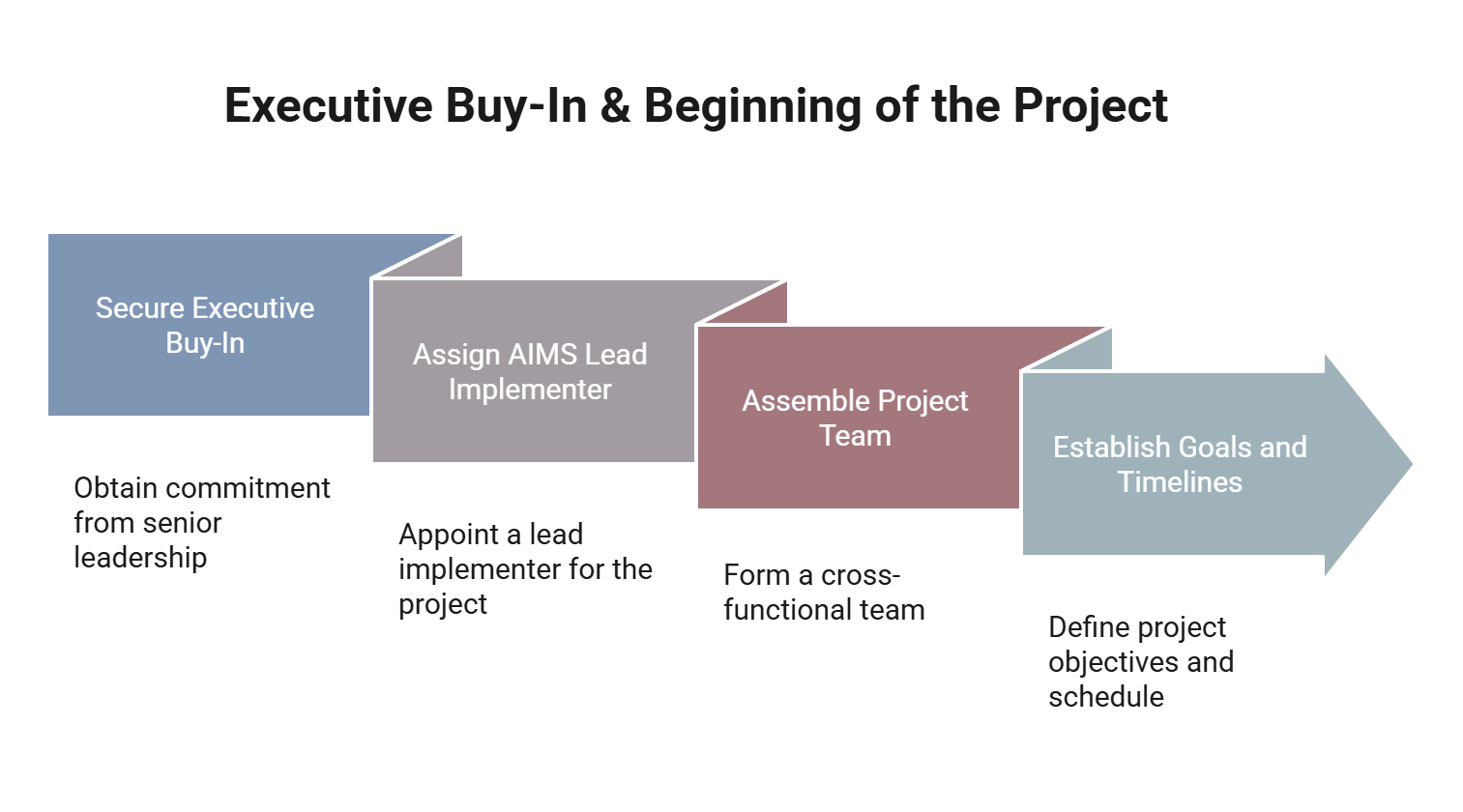

Step 1: Executive Buy-In and Beginning the Project

This first step marks the official launch of the ISO/IEC 42001 implementation journey. In this step, organizations secure commitment from senior leadership by highlighting the strategic, ethical, and regulatory importance of establishing an Artificial Intelligence Management System (AIMS). A Lead Implementer is appointed, and a cross-functional project team is assembled, bringing together key departments such as compliance, IT, HR, and legal. Clear goals, timelines, and responsibilities are defined, ensuring alignment and shared ownership from the outset.

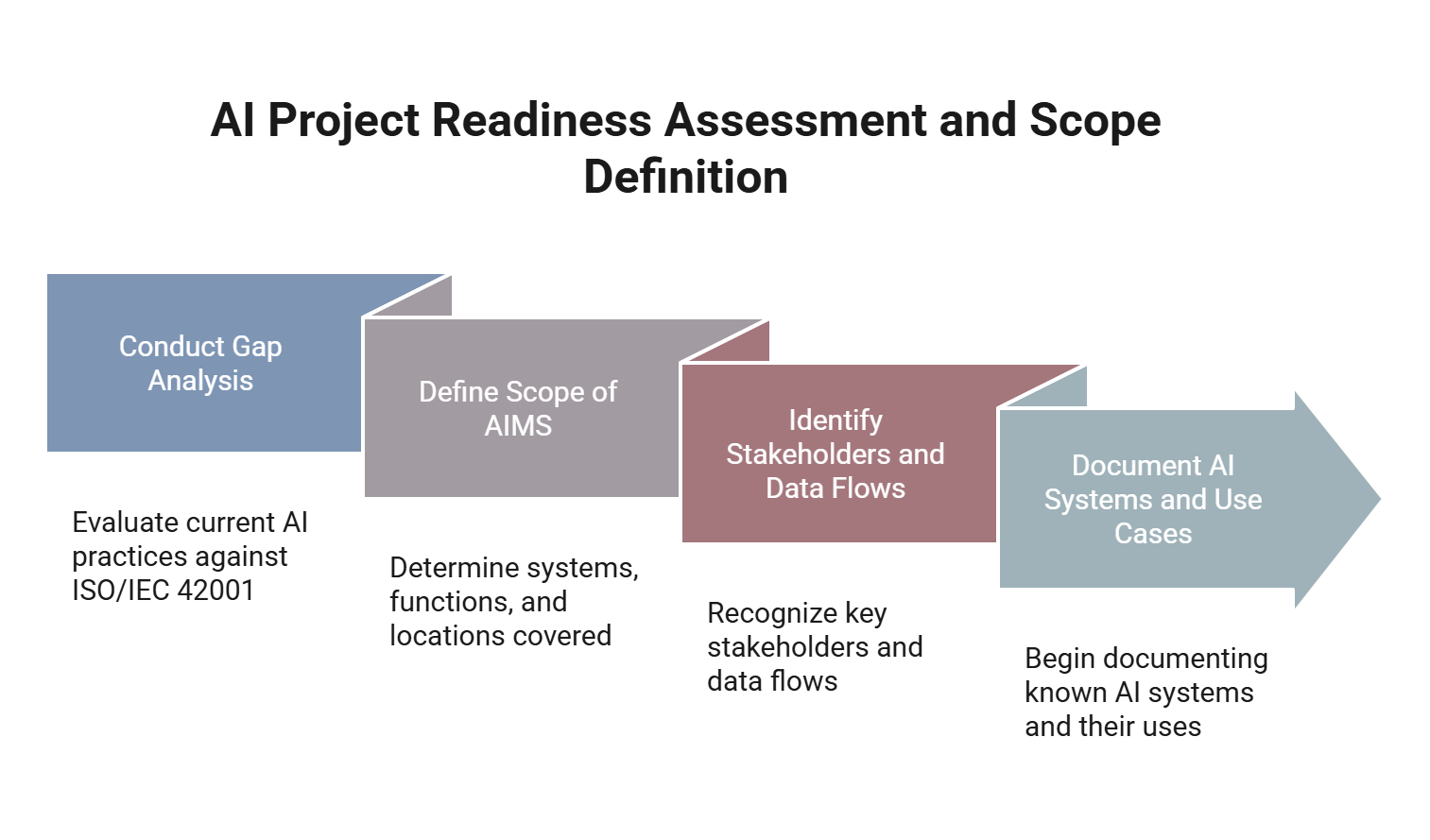

Step 2: Readiness Assessment and Defining the Scope of Your Project

This step focuses on understanding where the organization currently stands and what the AIMS will cover. A gap analysis compares existing AI practices against the requirements of ISO/IEC 42001, which identifies areas needing improvement and sets a baseline for the implementation. At the same time, the scope of the AIMS is defined by clarifying which systems, functions, and locations are in scope, covering both internal and third-party AI. This step also involves identifying key stakeholders, mapping data flows, and documenting known AI systems and their use cases.
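
As a rough illustration of the gap analysis described above, the sketch below tracks a small checklist and lists the items that still need work. The requirement labels (`REQ-01` and so on), the descriptions, and the three status values are hypothetical placeholders for illustration, not clause numbers or wording from the standard.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    ref: str          # hypothetical internal label, not a clause number
    description: str
    status: str       # assumed values: "met", "partial", or "missing"


def summarize_gaps(requirements):
    """Return requirements that are not yet met, with 'missing' before 'partial'."""
    order = {"missing": 0, "partial": 1}
    gaps = [r for r in requirements if r.status != "met"]
    # Unknown statuses sort first so they are not overlooked.
    return sorted(gaps, key=lambda r: order.get(r.status, 0))


# Illustrative checklist; a real one would be derived from the standard itself.
checklist = [
    Requirement("REQ-01", "AI policy approved by leadership", "met"),
    Requirement("REQ-02", "Inventory of AI systems and use cases", "partial"),
    Requirement("REQ-03", "AI risk assessment method defined", "missing"),
]

for gap in summarize_gaps(checklist):
    print(f"{gap.ref}: {gap.description} ({gap.status})")
```

The sorted list can serve as the baseline mentioned above: rerunning it later shows how many gaps have been closed.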

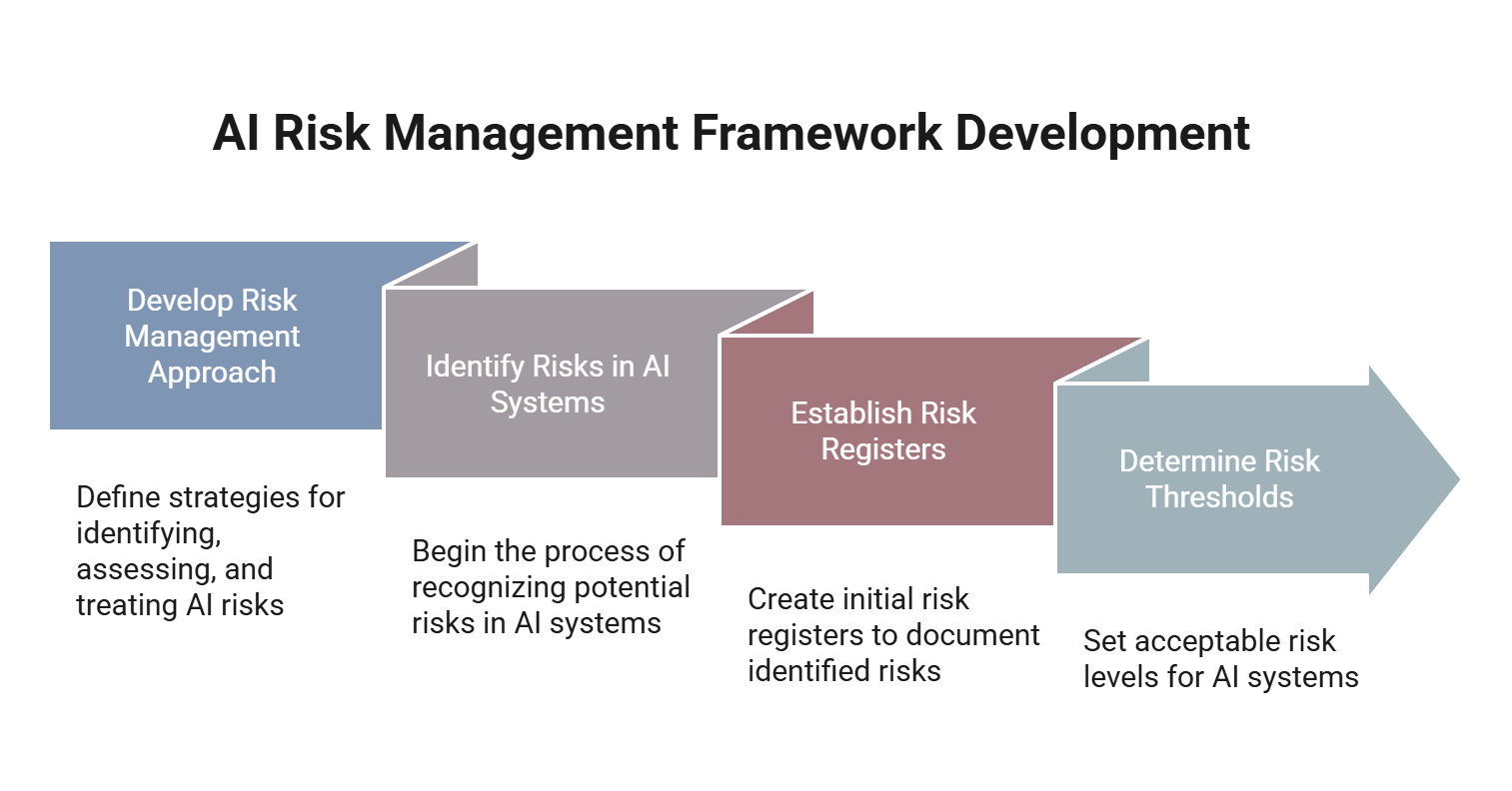

Step 3: Risk Management Framework

This step introduces a structured approach to identifying, assessing, and mitigating risks associated with AI systems. Organizations define how they will manage risks such as bias, misuse, performance drift, and ethical concerns across the AI lifecycle. This step includes developing a risk methodology tailored to AI, initiating risk assessments for existing or planned systems, and establishing risk registers. It also involves setting thresholds for acceptable risk levels, ensuring that risk treatment aligns with both organizational objectives and ISO/IEC 42001 requirements.
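
A risk register with an acceptance threshold can be sketched in a few lines. The example below assumes a simple likelihood-times-impact score on 1 to 5 scales and an acceptance threshold of 8; the scoring method, the threshold, and the system names are all illustrative assumptions, and an organization would define its own in its risk methodology.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    system: str
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; other methods are equally valid.
        return self.likelihood * self.impact


ACCEPTABLE_SCORE = 8  # hypothetical threshold for acceptable risk


def needs_treatment(register, threshold=ACCEPTABLE_SCORE):
    """Return risks scoring above the threshold, highest score first."""
    above = [r for r in register if r.score > threshold]
    return sorted(above, key=lambda r: r.score, reverse=True)


# Hypothetical register entries covering the risk types named above.
register = [
    Risk("resume-screener", "Bias against protected groups", 3, 5),
    Risk("chat-assistant", "Misuse to generate harmful content", 2, 4),
    Risk("demand-forecast", "Performance drift after a market shift", 4, 3),
]

for risk in needs_treatment(register):
    print(f"{risk.system}: {risk.description} (score {risk.score})")
```

Risks at or below the threshold stay in the register as accepted; only those above it are queued for treatment.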

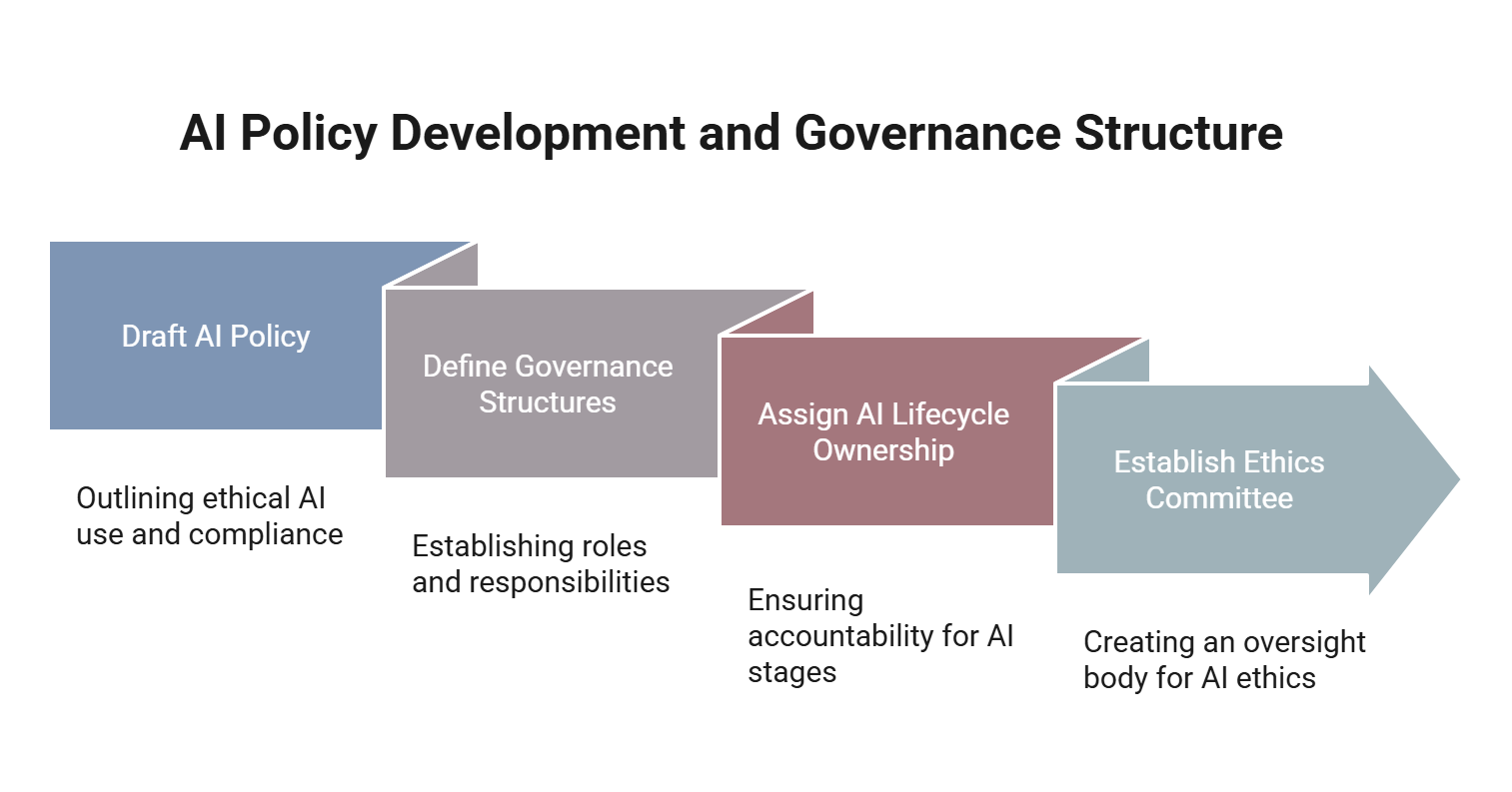

Step 4: Policy Development and Governance Structure

This step focuses on formalizing the organization's commitment to responsible AI through clear policies and defined oversight. This includes drafting an AI policy that addresses ethical principles, transparency, and compliance with legal and regulatory requirements. Governance structures are established to assign roles and responsibilities across the AI lifecycle—from development to decommissioning. Where appropriate, an AI ethics committee or internal oversight board is formed to guide decision-making and escalate concerns. This step ensures that accountability is embedded into the system from the outset.

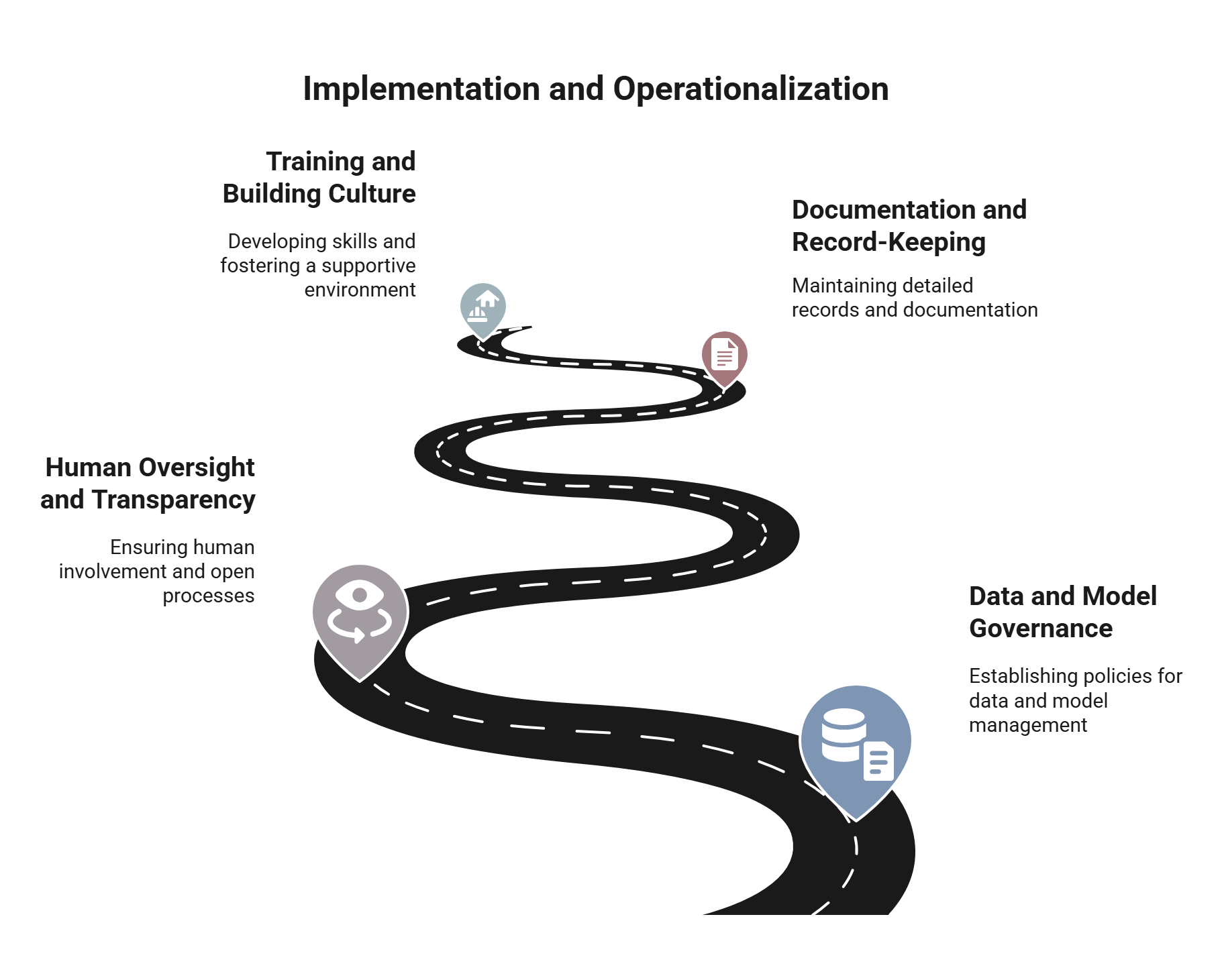

Phase 2: Implementation and Operationalization

Phase 2 focuses on putting the foundational plans into action by embedding AI governance practices across the organization. This phase involves establishing robust data and model governance processes to ensure quality, fairness, privacy, and traceability throughout the AI lifecycle. It also includes implementing procedures for human oversight and transparency, especially for high-risk AI systems, to maintain accountability and user trust. Organizations begin organizing documentation, setting up centralized record-keeping, and rolling out targeted training programs to build awareness and competence across teams. By operationalizing the principles defined in Phase 1, this phase ensures that responsible AI practices are not only documented but actively integrated into day-to-day activities.

To gain the skills needed to lead this phase effectively and drive real organizational change, consider enrolling in a Certified ISO/IEC 42001 Lead Implementer course and become a recognized expert in AI governance implementation.

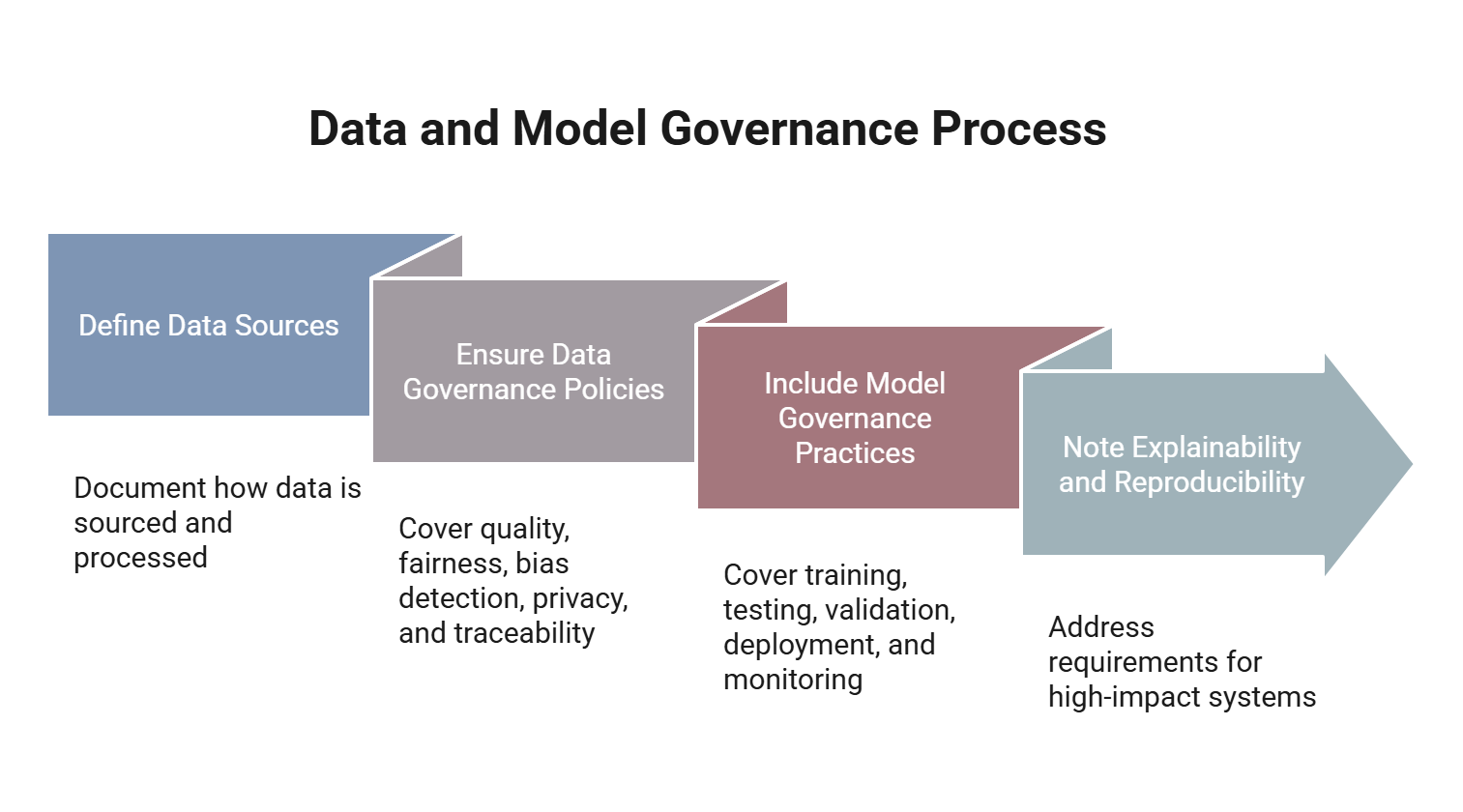

Step 5: Data and Model Governance

This step ensures that the organization has strong controls over how data is sourced, processed, and used in AI systems. It involves defining governance policies that address data quality, fairness, bias detection, privacy, and traceability. It also extends to model governance, covering how AI models are trained, validated, deployed, and monitored throughout their lifecycle. For high-impact systems, special attention is given to explainability and reproducibility, which supports compliance with ISO/IEC 42001 and builds trust in AI outcomes.
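
To make the bias-detection part of data governance concrete, here is a minimal sketch of one possible check: comparing selection rates across groups. The metric (ratio of lowest to highest selection rate), the group labels, and the sample data are assumptions chosen for illustration; the article does not name a specific fairness metric, and a real program would likely use several.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(bool(selected))
    return {group: positives[group] / totals[group] for group in totals}


def parity_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group, so rates are equal
    return min(rates.values()) / highest


# Hypothetical model decisions: 4 of 5 selected in group A, 2 of 5 in group B.
sample = (
    [("A", True)] * 4 + [("A", False)]
    + [("B", True)] * 2 + [("B", False)] * 3
)

rates = selection_rates(sample)
print(rates, parity_ratio(rates))
```

A governance policy could define a minimum acceptable ratio and require review of any model that falls below it.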

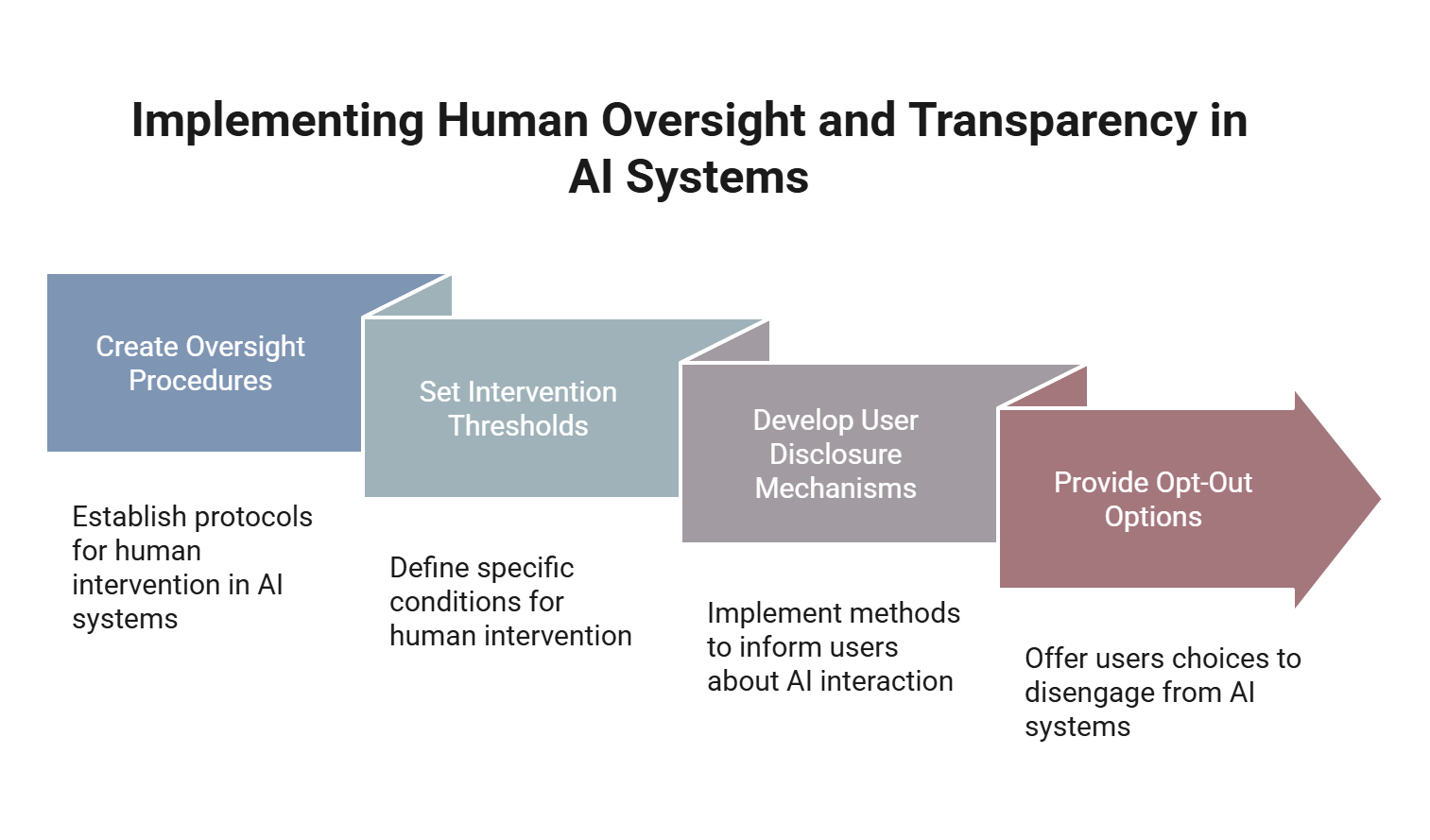

Step 6: Human Oversight and Transparency

This step focuses on ensuring that people remain in control of AI systems, especially in high-risk scenarios. It involves defining clear procedures for when and how human intervention should occur, including thresholds for overriding AI outputs. Organizations also implement transparency measures to inform users when they are interacting with an AI system. Where appropriate, disclosures and opt-out options are provided to employees, customers, or other affected parties, reinforcing trust and accountability in AI use.
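
A human-intervention threshold can be expressed as a simple routing rule. The sketch below assumes the AI system reports a confidence value between 0 and 1 and that each use case is flagged as high-risk or not; the function name, the 0.90 default, and the two outcome labels are hypothetical.

```python
def route_decision(confidence: float, high_risk: bool,
                   review_threshold: float = 0.90) -> str:
    """Decide whether an AI output can be applied automatically.

    High-risk use cases always go to a person; other outputs go to a
    person only when the model's confidence is below the threshold.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if high_risk or confidence < review_threshold:
        return "human_review"
    return "auto_approve"


print(route_decision(0.95, high_risk=False))  # confident, low-risk case
print(route_decision(0.95, high_risk=True))   # high-risk case
print(route_decision(0.50, high_risk=False))  # low-confidence case
```

Keeping the rule in one place makes it easy to document, audit, and adjust as the organization learns where human review adds the most value.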

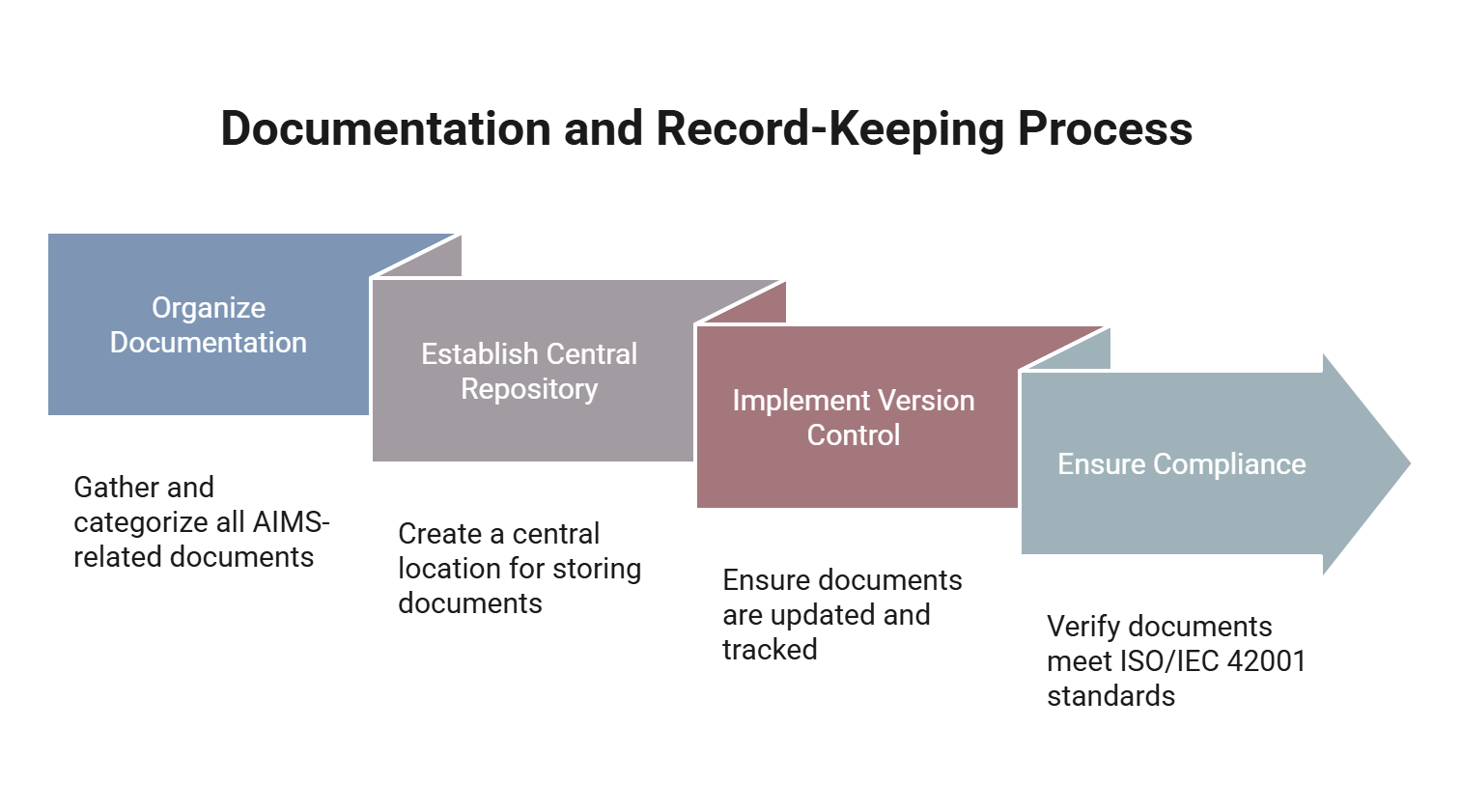

Step 7: Documentation and Record-Keeping

This step ensures that all elements of the Artificial Intelligence Management System (AIMS) are properly recorded and traceable. This includes organizing policies, procedures, risk assessments, audit logs, and training records in a centralized repository with version control. Proper documentation not only supports compliance with ISO/IEC 42001 but also provides evidence of due diligence, facilitates audits, and enables continuous improvement. Maintaining clear, accurate, and accessible records is essential for demonstrating transparency and accountability throughout the AI lifecycle.

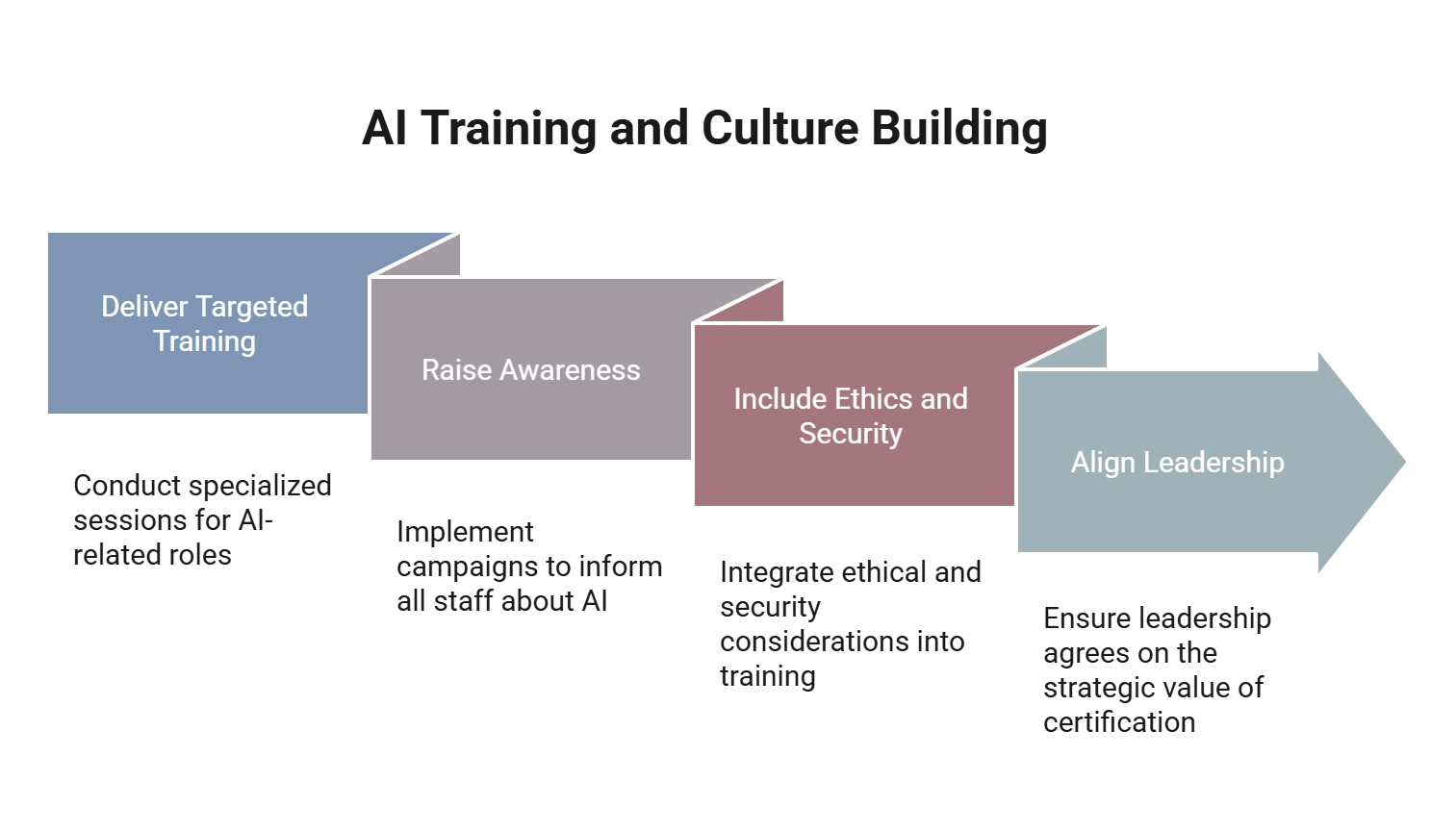

Step 8: Training and Building Culture

Here, we focus on equipping leaders and employees with the knowledge and mindset needed to support responsible AI practices.

Targeted training is delivered to teams involved in the development, deployment, and oversight of AI systems, while organization-wide awareness initiatives help embed ethical and compliant behavior into the culture. Training programs cover key topics such as AI ethics, transparency, human rights, and security. This step also ensures that leadership reinforces the strategic value of ISO/IEC 42001 certification, creating a unified vision across all levels of the organization.

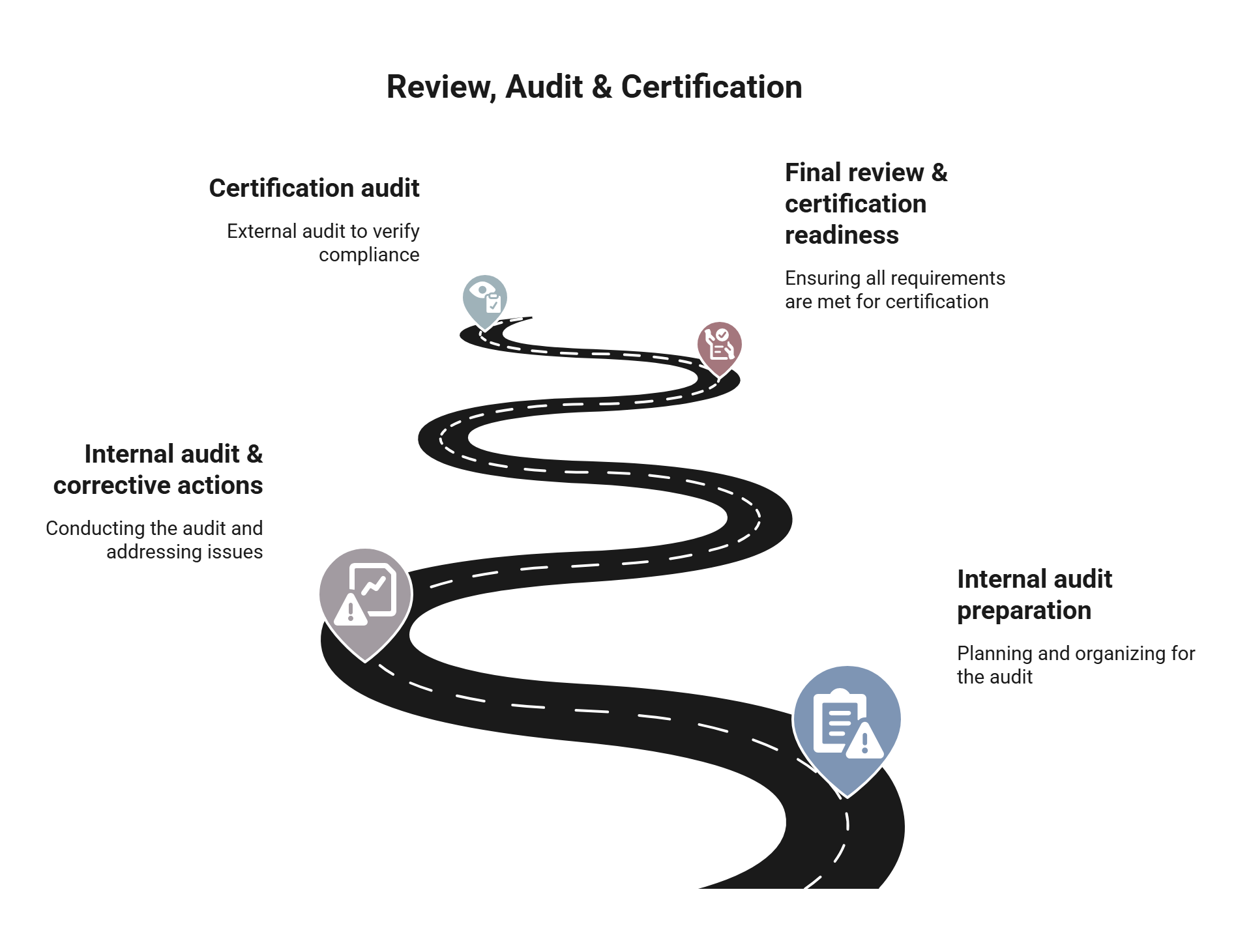

Phase 3: Review, Audit and Certification

Phase 3 prepares the organization for formal evaluation and external certification. Building on the processes established in earlier phases, this stage begins with internal audit preparation, including selecting qualified auditors, reviewing compliance evidence, and conducting pre-audit checks. A full internal audit follows, helping to identify any nonconformities and drive corrective actions. Management then conducts a formal review to assess the effectiveness of the Artificial Intelligence Management System (AIMS), address remaining gaps, and confirm readiness for certification. The phase concludes with the certification audit conducted by an accredited body. Successful completion results in ISO/IEC 42001 certification, validating the organization’s commitment to trustworthy and accountable AI practices.

To play a key role in this critical phase and lead organizations through successful audits, consider taking a course to become a Certified ISO/IEC 42001 Lead Auditor.

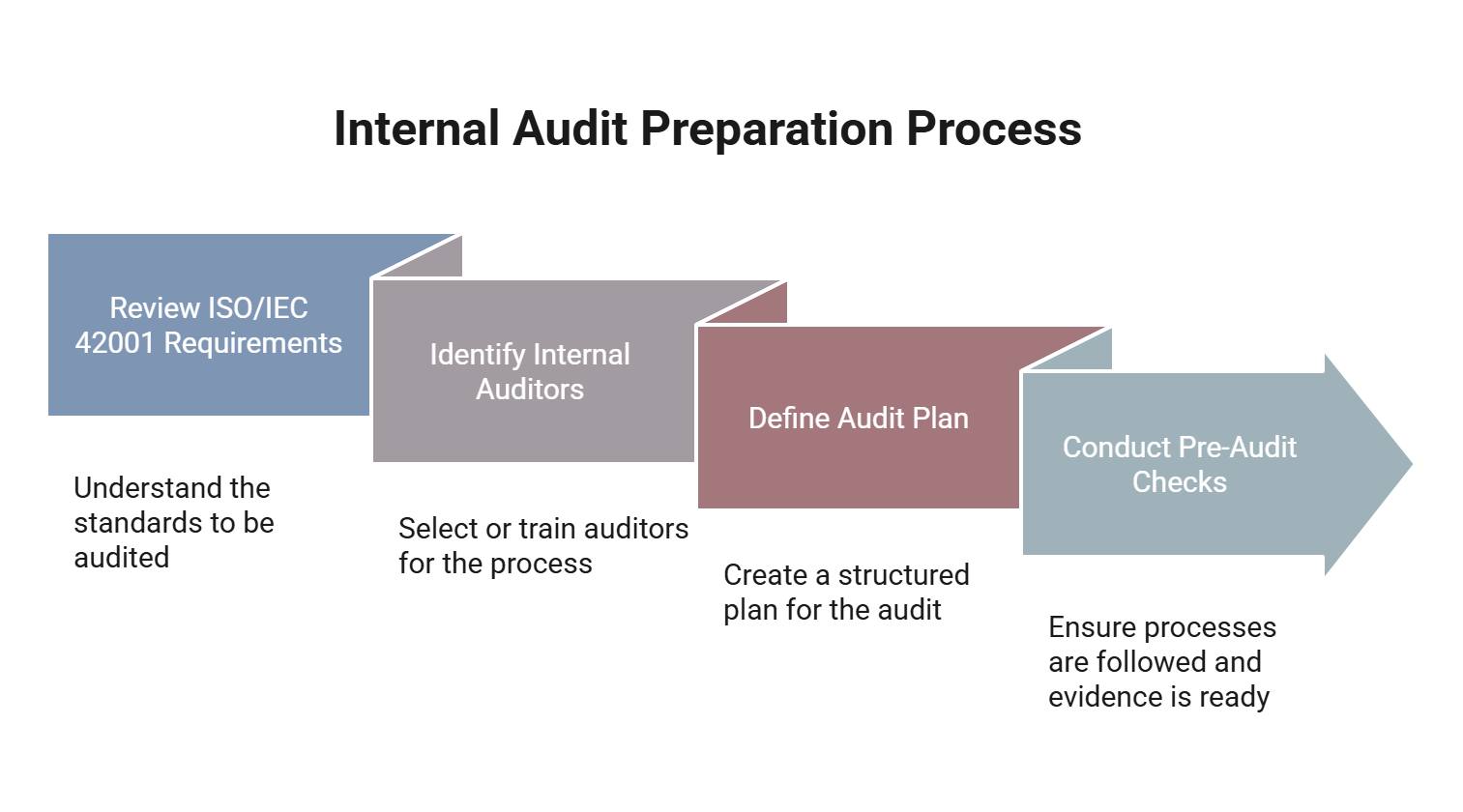

Step 9: Internal Audit Preparation

This step lays the groundwork for evaluating the effectiveness of the Artificial Intelligence Management System (AIMS) before the formal certification audit.

Organizations begin by reviewing ISO/IEC 42001 requirements and identifying qualified internal auditors—either by training existing staff or engaging external experts. An audit plan is developed to ensure comprehensive and impartial review, with auditors independent from the implementation team. Pre-audit checks are conducted to verify that processes are in place and evidence is properly documented, helping identify any gaps before the internal audit begins.

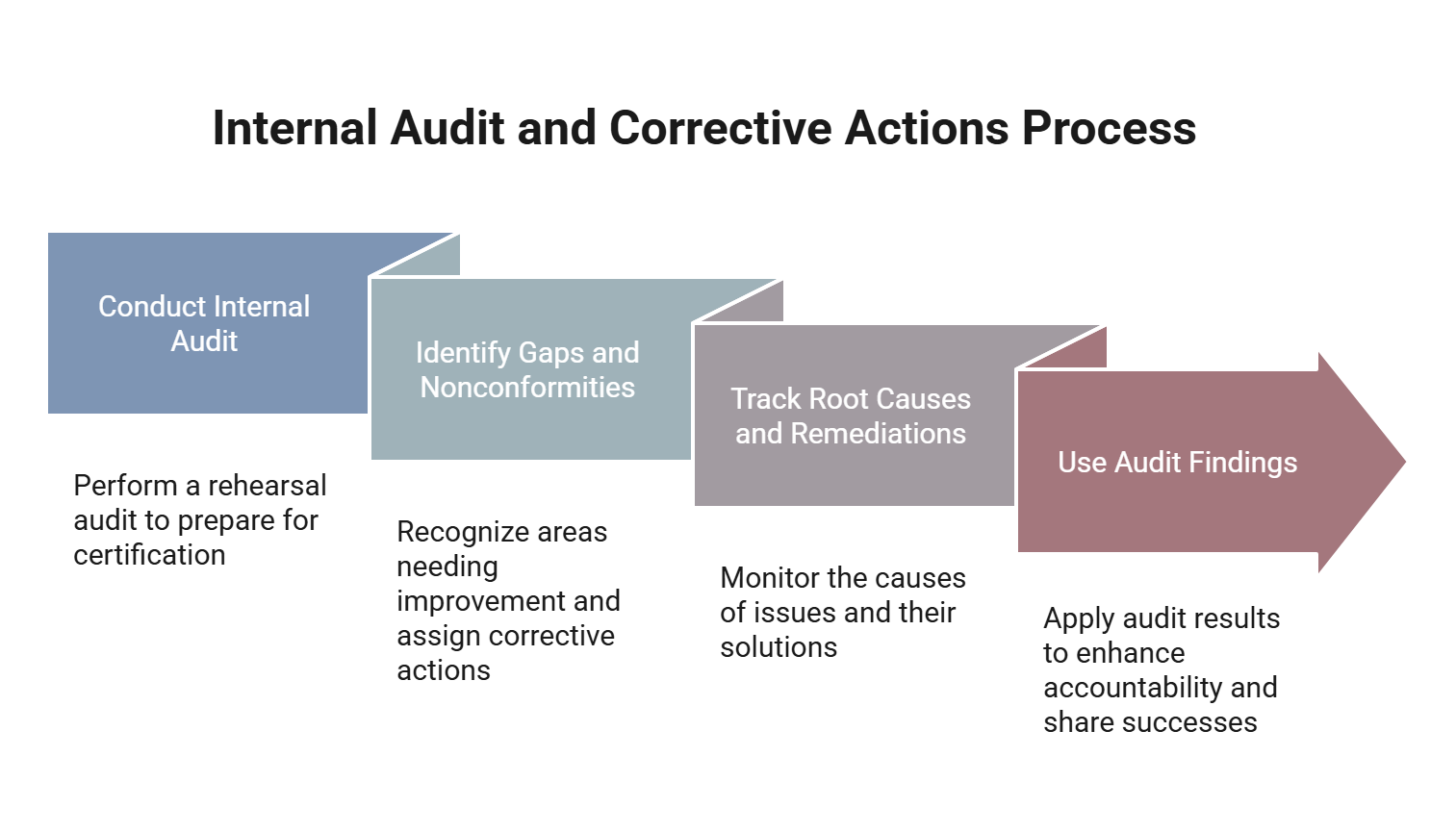

Step 10: Internal Audit and Corrective Actions

This step involves conducting a thorough internal audit to assess compliance with ISO/IEC 42001 and identify any nonconformities or areas for improvement. Audit findings are documented, and corrective actions are assigned to address root causes, not just symptoms. This step is essential for validating the effectiveness of the AIMS and ensuring that all processes are functioning as intended. It also reinforces accountability across departments and provides an opportunity to share lessons learned and highlight early successes before moving to the certification stage.
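
One way to keep corrective actions honest is to track each finding with its root cause, owner, and due date, then list whatever still blocks certification readiness. The sketch below is a minimal illustration; the field names, finding identifiers, and sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Finding:
    ident: str
    description: str
    root_cause: Optional[str] = None
    corrective_action: Optional[str] = None
    owner: Optional[str] = None
    due: Optional[date] = None
    closed: bool = False


def blocking_issues(findings, today):
    """Map each open finding to the reasons it is not yet resolved."""
    issues = {}
    for f in findings:
        if f.closed:
            continue
        reasons = []
        if not f.root_cause:
            reasons.append("no root cause recorded")
        if not f.corrective_action or not f.owner:
            reasons.append("corrective action not assigned")
        if f.due is not None and f.due < today:
            reasons.append("overdue")
        issues[f.ident] = reasons or ["in progress"]
    return issues


# Hypothetical audit findings.
findings = [
    Finding("NC-1", "Training records missing for two reviewers",
            "Onboarding checklist omits AI training",
            "Add AI training to onboarding checklist",
            "HR lead", date(2025, 1, 15), closed=True),
    Finding("NC-2", "Model validation report not versioned"),
    Finding("NC-3", "Risk register not reviewed this quarter",
            "No review cadence defined", "Schedule quarterly review",
            "Risk owner", date(2025, 1, 10)),
]

for ident, reasons in blocking_issues(findings, date(2025, 2, 1)).items():
    print(ident, "->", ", ".join(reasons))
```

Flagging findings that lack a recorded root cause reflects the point above: an action assigned without one may only treat the symptom.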

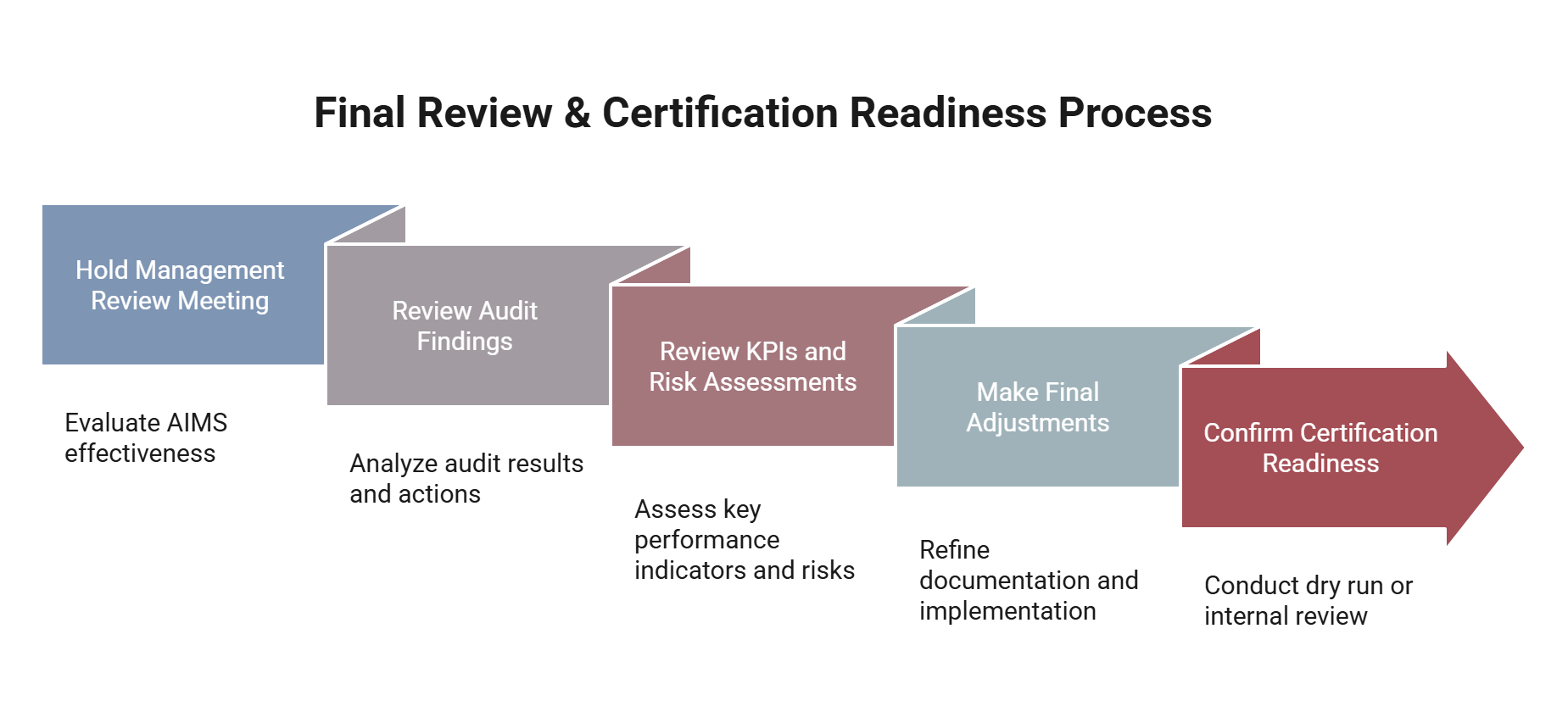

Step 11: Final Review and Certification Readiness

This is the last internal checkpoint before engaging with a certification body. In this step, the organization conducts a formal management review to evaluate the overall performance of the Artificial Intelligence Management System (AIMS). This includes reviewing audit findings, corrective actions, key performance indicators, and risk assessments. Any final adjustments to documentation or processes are made to ensure full alignment with ISO/IEC 42001 requirements. A final internal review or dry run may be conducted to confirm that the organization is fully prepared for the external certification audit.

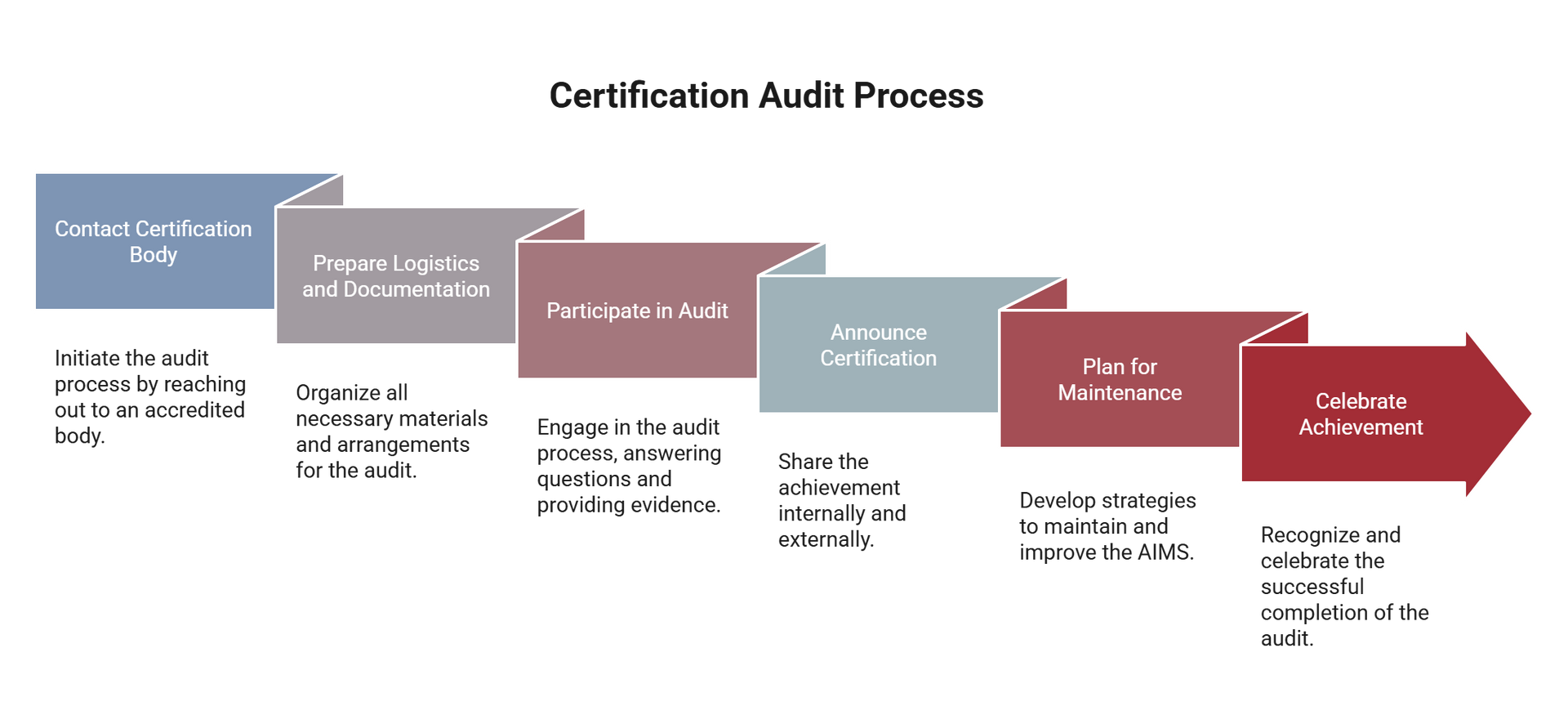

Step 12: Certification Audit

This is the final step in the ISO/IEC 42001 journey, where an accredited certification body formally assesses the organization’s AIMS for compliance. This step involves preparing documentation, scheduling audit activities, and ensuring that relevant personnel are available to support the audit process. During the audit, the organization must demonstrate how its policies, procedures, and practices align with the standard’s requirements. Any observations or minor findings are addressed promptly. Upon successful completion, the organization receives ISO/IEC 42001 certification—an important milestone that validates its commitment to responsible, ethical, and compliant AI governance.

Why ISO/IEC 42001 Certification Matters

Certification does more than strengthen how you manage your AI systems; it also builds trust with stakeholders and customers. It shows that an independent body has assessed your approach to ethical, transparent, and safe AI. ISO/IEC 42001 provides a formalized, internationally recognized structure to prove that you’ve put the work in.

If you are leading an implementation project or preparing to assess compliance, becoming a Certified ISO/IEC 42001 Lead Implementer or Lead Auditor equips you with the skills and credentials to drive responsible AI practices within your organization. Enroll in one of our certification courses and position yourself at the forefront of AI governance, risk management, and compliance.
